After Dave’s blog post put out the call for 50 visualizations using the new Audio API in Firefox 4, I decided it was time to stop thinking about learning Processing and Processing.js and start doing things with them. So I took a few hours on Saturday to poke at the code that Dave put up, and after a lot of shots in the dark I came up with this. I was disappointed in how simple my results seemed after so much time spent. Today I went back to the book and learned a few more Processing tricks, which inspired me to hack on the audio visualizations again.

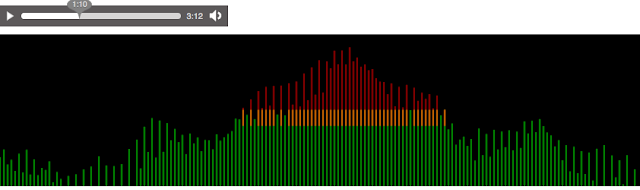

For my second attempt I really tried to understand what the JavaScript functions were doing, how the actual audio data was being generated, and in what form it was being passed to the Processing draw() function. I found a couple of pages on the Mozilla Developer Network that helped me understand the API a bit better. This time I could really make the visualization do what I wanted. The next thing I would like to learn in this area is how to work with user interaction better, responding with audio and video to user movements and key press events.
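To give a rough idea of the kind of data involved, here is a minimal sketch rather than the code from my visualization. It assumes the audio arrives as one array of float samples with the channels interleaved (left, right, left, right, ...), which is how I understand the Firefox 4 API's frame buffer to be laid out. The `channelLevels` helper is a name I made up for illustration; it boils one buffer down to a loudness value per channel that a draw() function could turn into shapes.

```javascript
// Hypothetical helper (not part of any API): reduce one interleaved
// buffer of samples to a root-mean-square (RMS) level per channel.
function channelLevels(frameBuffer, channels) {
  const sums = new Array(channels).fill(0);
  const framesPerChannel = Math.floor(frameBuffer.length / channels);

  // Sample i belongs to channel i % channels because of the interleaving.
  for (let i = 0; i < framesPerChannel * channels; i++) {
    const sample = frameBuffer[i];
    sums[i % channels] += sample * sample;
  }

  // RMS = square root of the mean of the squared samples.
  return sums.map(function (sum) {
    return framesPerChannel > 0 ? Math.sqrt(sum / framesPerChannel) : 0;
  });
}

// Assumed wiring in Firefox 4 (commented out so the helper runs anywhere):
// var levels = [];
// audio.addEventListener("MozAudioAvailable", function (event) {
//   levels = channelLevels(event.frameBuffer, audio.mozChannels);
// }, false);
```

A draw() function could then read `levels` on every frame and map each value to a size or color.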

Second attempt at audio visualization

I’m really glad Dave put out the challenge, and that I made the time to teach myself something new.

Glad you found the Audio API info on MDN useful. Those pages were written just a few weeks ago by Sebastian Perez Vasseur at the MDN documentation sprint.